I finish a ticket, push up, and wait.

The build kicks off, so I context-switch. Another task, Slack, Linear, maybe a review on someone else's PR. By the time I loop back, it's done.

Five minutes gone, barely noticed. Or worse, it fails. Repeat, and 5 becomes 10. Merge conflicts. Repeat, and 10 becomes 15. Merge, regenerate, redeploy. 20.

With the rise of Claude Code, Codex, and Opencode, this gets amplified as you parallelize more work and output increases.

It goes without saying, this shouldn't be a bottleneck where avoidable. But that's the exact problem… no one says anything. Not because they don't care, but because everyone is so focused on shipping they barely notice.

Classic boiling frog. 🐸

First up: replacing Next.js with plain React, Vite, and TanStack Router in our dashboard.

Why we migrated away from Next.js

This should not be taken as a slight against Next.js, as we’re still big fans of the framework. We still use it for our website and for another one of our apps. It’s a great framework that lives at the cutting edge of React.

So why move?

- Build times: 4.5 to 6 minutes for the dashboard.

- Local development resource usage: a minimum of 8GB of RAM, up to 15GB at times (and reportedly more). This was even with Turborepo.

- Local page load times. Clicking on a page and waiting 10-20s was not only frustrating, but another instance of boiling-frog time accumulation.

- Our dashboard doesn’t benefit from SSR enough to justify the framework. We don’t use Next.js API routes, and we only use middleware for UI-blocking permission checks.

- The dashboard has been soft-locked on an old version of Next.js because of dependencies and the large codebase changes needed to upgrade. Our last attempt failed and has been parked since.

- Lastly, we’re a team of product engineers, mostly leaning towards backend, including myself. We try to move fast and build well. Fighting server component hierarchies and App Router behaviors is a waste of our time as a startup.

As Alguna embraces AI engineering, parallelizing tasks is becoming common. This creates additional emphasis on build times and local development. Even on beefy machines, being unable to run multiple instances of the dashboard is a dealbreaker.

Truth be told, we possibly could have invested further in improving performance: maybe retrying the Next.js version bump, or trying Vercel’s profiling tools.

Beyond the time investment, though, prior experience told us there's a ceiling. A lot of these issues stem from core Next.js behavior around RSCs and SSR.

So, what next?

The capability increase from AI coding agents, namely Claude Code, lets us experiment with entirely new solutions with far less commitment.

We wanted to stay within the React ecosystem to make the migration more seamless and stick to patterns we knew. This ruled out some interesting choices such as Deno Fresh, Svelte, Vue, and SolidJS.

So I took a look across the market:

- TanStack Start has taken the internet by storm in the last few months. I’ve been following it since it was in its early days, due to being a big fan of TanStack Router, Query, Table, and honestly, everything else they touch.

- Remix. None of us had used it. It has some promising concepts, but the team seems to be changing direction again, which concerned us.

- React Router. Tied to Remix, and likewise it's unclear where the team is heading.

- TanStack Router. Lots of improvements over React Router, and built by the TanStack team.

So we chose to go down the TanStack route, with React Router in mind as a fallback if we weren’t sold. Hint: we were.

Why TanStack Router over TanStack Start?

Initially, I chose TanStack Start. I was open to staying in the world of SSR if it was performant and if our other red flags got fixed. This would allow us to keep our current system largely intact, just converting RSCs over to TanStack Start SSR where applicable.

This was actually successful, and very performant. Local RAM usage dropped immediately, hovering around 600MB-1.5GB on average. Build times on Vercel were instantly 3x faster, at ~1m30s. Page load times were ~3s in the worst case, and often far faster.

But ultimately… we decided we were sick of the pain surrounding SSR. At the time, TanStack Start didn’t support RSCs, and it is still in the release candidate stage. So, for now, we chose the stability and DX of TanStack Router to eject from RSCs. We are intentionally keeping ourselves open to returning if TanStack Start fixes a lot of these issues.

Once we stripped away TanStack Start and went with pure TanStack Router, React, and Vite, we saw similar or even stronger benefits: RAM usage of ~800MB-1.2GB (still far lower than Next.js), build times averaging 59s, and local page loads of at most ~1s on first load, which we will be addressing in the coming weeks.

Claude Code: The golden bullet for fast migration

Even a little over a year ago, merely experimenting with another framework for the app would have been a high-lift process, let alone migrating away.

We had jokingly tried to one-shot a TanStack migration during our offsite but obviously ran into a mess. With proper focus I was able to get our whole dashboard functional in a little over a weekend by using task orchestration with Claude.

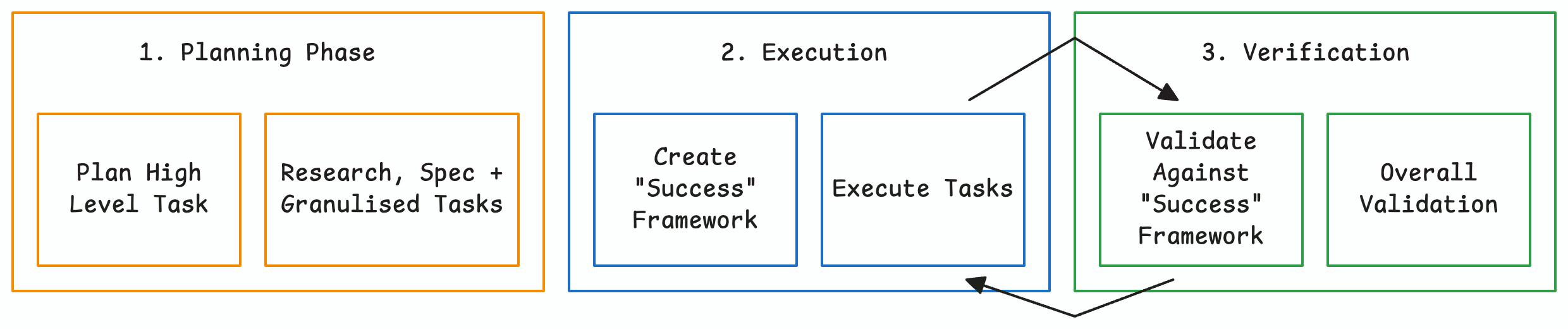

I personally use a rough framework for all of my AI development that I’ve found dramatically increases output quality. It looks extremely basic, akin to standard software development.

But most people skip steps, not realizing how much each one matters to the quality of AI engineering.

Migration process: Step-by-step

Execution was very straightforward and followed the framework above:

- Split the migration into clear groups or phases, each with its own plan. Each plan was then researched and broken down into granular tasks separately. The TanStack docs were great here for the agents’ clarity.

- Decouple individual components from RSC.

- Identify affected dependencies and plan any migration steps for each. For us, it was Next.js and its sub-packages, Tailwind (due to some setup issues), Clerk, and a handful of others.

- TanStack core setup and local dev setup.

- Identifying parallel routes and converting them to other patterns.

- Migrating all standard routes to TanStack Router and TanStack Query.

- Any CI changes required

- Identify and fix any testing which would break

- Misc tasks such as env vars, auth, state, caches, url parameter usage and more.

- With each granular task list, I used a task orchestrator to queue up the tasks for execution. Individual Claude Code instances were then spun up to begin execution.

These tasks were tightly scoped so the agent could complete its one task (or a set of many similar tasks) well, instead of many tasks with mixed results.

- Define “success”, with a way to verify it. This could be as simple as a successful build, or more complex, like giving Claude browser control (agent-browser or Chrome) to manually verify components itself.

- Plan mode inside Claude Code, to explore unknowns in the plan before starting and to keep each task focused.

- Execute tasks.

- Validation was partially automated, with the agent verifying its own work. The second part was me personally checking the work to ensure it hadn't done anything strange during that part of the migration.

- If it had deviated, I didn’t battle the agent beyond one correction. I simply wiped its progress and went back through with better clarity for the agent, possibly updating the success framework as well.

- Repeat steps 2+3, while doing manual check-ins between larger phases of work. This was to check for deviance and to add any missing tasks.
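The define-success, execute, validate, and one-correction-then-wipe steps above can be sketched as a small control loop. This is a minimal TypeScript sketch under stated assumptions, not the orchestrator used for the migration: `AgentTask`, `run`, `verify`, `wipe`, and `executeWithVerification` are hypothetical names, and `verify` stands in for whatever counts as success (a passing build, a browser check).

```typescript
// Hypothetical shapes: none of these names come from Claude Code or any
// real orchestrator; they only illustrate the loop described above.
type Verdict = { ok: boolean; notes?: string };

interface AgentTask {
  id: string;
  // Runs (or re-runs) the agent; `feedback` is the correction, if any.
  run: (feedback?: string) => void;
  // The task's definition of "success", e.g. a build or a browser check.
  verify: () => Verdict;
  // Throws away the agent's progress (e.g. resets its worktree).
  wipe: () => void;
}

type Outcome = "accepted" | "wiped";

// Attempt, verify, allow at most `maxCorrections` targeted corrections,
// and otherwise wipe the work so the task can be re-planned.
function executeWithVerification(task: AgentTask, maxCorrections = 1): Outcome {
  task.run();
  let verdict = task.verify();
  let corrections = 0;

  while (!verdict.ok && corrections < maxCorrections) {
    corrections++;
    task.run(verdict.notes); // feed the failed check back as the correction
    verdict = task.verify();
  }

  if (verdict.ok) return "accepted";
  task.wipe(); // don't keep fighting the agent: reset and re-plan instead
  return "wiped";
}
```

Keeping the correction budget as a parameter makes the "no more than one correction" rule explicit; a wiped task goes back to planning with a sharper success definition rather than accumulating patches.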

This process became mostly automated as I built out my task orchestrator. It also meant that the migration only required me to watch over, check work, and distribute tasks (eventually automated). Dependency trees within my task orchestrator let me control what could run in parallel within git worktrees, which kept agents from conflicting.
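The dependency-tree idea boils down to topological scheduling: group tasks into waves where every task's dependencies finished in an earlier wave, then give each task in a wave its own git worktree and agent. Here is a minimal sketch using Kahn's algorithm, assuming a hypothetical `Task` shape with `id` and `dependsOn`; it is not the orchestrator's actual code, and the task names are illustrative, loosely based on the phases listed earlier.

```typescript
// Hypothetical task shape: an id plus the ids it depends on.
interface Task {
  id: string;
  dependsOn: string[];
}

// Groups tasks into "waves": every task in a wave has all of its
// dependencies in earlier waves, so a wave's tasks can run in parallel
// (e.g. one git worktree and one agent per task). Throws on unknown
// dependencies or cycles.
function planWaves(tasks: Task[]): string[][] {
  const ids = new Set(tasks.map((t) => t.id));
  const remaining = new Map<string, number>(); // id -> unmet dependency count
  const dependents = new Map<string, string[]>(); // id -> tasks waiting on it

  for (const t of tasks) {
    remaining.set(t.id, t.dependsOn.length);
    for (const dep of t.dependsOn) {
      if (!ids.has(dep)) throw new Error(`${t.id} depends on unknown task ${dep}`);
      const list = dependents.get(dep) ?? [];
      list.push(t.id);
      dependents.set(dep, list);
    }
  }

  const waves: string[][] = [];
  let ready = tasks.filter((t) => t.dependsOn.length === 0).map((t) => t.id);
  let scheduled = 0;

  while (ready.length > 0) {
    waves.push(ready);
    scheduled += ready.length;
    const next: string[] = [];
    for (const id of ready) {
      // Finishing `id` unblocks one dependency of each task waiting on it.
      for (const child of dependents.get(id) ?? []) {
        const left = remaining.get(child)! - 1;
        remaining.set(child, left);
        if (left === 0) next.push(child);
      }
    }
    ready = next;
  }

  // Anything never scheduled is stuck behind a cycle.
  if (scheduled !== tasks.length) throw new Error("dependency cycle detected");
  return waves;
}

// Illustrative phases, not the real task list.
const waves = planWaves([
  { id: "core-setup", dependsOn: [] },
  { id: "decouple-rsc", dependsOn: [] },
  { id: "parallel-routes", dependsOn: ["core-setup"] },
  { id: "standard-routes", dependsOn: ["core-setup", "decouple-rsc"] },
  { id: "tests", dependsOn: ["standard-routes"] },
]);
// waves: [["core-setup", "decouple-rsc"], ["parallel-routes", "standard-routes"], ["tests"]]
```

Tasks in the same wave never depend on each other, so running them in separate worktrees avoids agents editing on top of unfinished prerequisites.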

By the end of the weekend, I had the whole app migrated and running thanks to Claude.

Yes, Claude and I had both missed things.

Yes, some things were broken.

But the majority of the app was migrated with no issues. Perfection came later.

The following weeks were spent squeezing in QA time and continually merging in other changes. The final stage was deploying to our sandbox environment, which has test data. We saw only minor interface issues, which we patched before going to production.

Results, pain points, and additional benefits

We are extremely happy with our choice to move, and will be considering moving our other apps with similar constraints off of Next.js.

Now, let's take a look at the before and after.

Before

Here, I'm navigating to our subscriptions and invoices pages on a fresh development server. On Next.js, it takes 10s for invoices (and around 14s for subscriptions).

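```tsx
// layout.tsx
import { Suspense, type ReactNode } from "react";
// PageLayout, Section, TableLoading, PlanPageActions and plansConfig are
// app-specific imports, omitted here.

// Shared shell for the plans pages: the page streams in behind a
// table skeleton while its server component fetches data.
export default function Layout(props: { children: ReactNode }) {
  return (
    <PageLayout
      pageTitle={plansConfig.mainNav.text}
      PageButtons={<PlanPageActions />}
      sticky={true}
    >
      <Section spacing={"lg"}>
        <Suspense
          fallback={
            <Section spacing="md">
              <TableLoading colCount={4} />
            </Section>
          }
        >
          {props.children}
        </Suspense>
      </Section>
    </PageLayout>
  );
}
```

```tsx
// page.tsx
// ClientInstance, PaginationSearchParams, executeServerSideRequest,
// withPermissionGuard, Permissions and the Plan* components are
// app-specific imports, omitted here.

// Search params arrive as strings, so parse them before calling the API.
const getPlans = async (
  client: ClientInstance,
  { page, limit }: PaginationSearchParams,
) => {
  return await client.pricing.PlanList({
    limit: limit ? parseInt(limit, 10) : 1000,
    page: page ? parseInt(page, 10) : 1,
  });
};

// Async server component: plans are fetched on the server during render.
const PlanList = async ({ searchParams }) => {
  const plans = await executeServerSideRequest({
    fn: getPlans,
    params: searchParams as PaginationSearchParams,
  });

  return plans.items.length === 0 ? (
    <PlanEmptyState />
  ) : (
    <PlansTableContainer data={plans} />
  );
};

export default withPermissionGuard(PlanList, Permissions.PlanView);
```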

After

With TanStack Router, the same navigation is almost immediate. That's because Next.js adds on-demand compilation on top of server-side rendering, and plain React, Vite, and TanStack Router face neither of those overheads.

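```tsx
import { createFileRoute } from "@tanstack/react-router";
import { useQuery } from "@tanstack/react-query";
import { zodValidator } from "@tanstack/zod-adapter";
// withPermissionGuard, Permissions, searchSchema, planListQueryOptions,
// usePlansPageTitle, ErrorPage and the layout/table components are
// app-specific imports, omitted here.

// File-based route: the permission guard wraps the component, and the
// search params (page, limit) are validated and typed by the schema.
export const Route = createFileRoute("/_authenticated/plans/")({
  component: withPermissionGuard(PlansPage, Permissions.PlanView),
  validateSearch: zodValidator(searchSchema),
});

function PlansPage() {
  usePlansPageTitle();

  // Typed search params, produced by validateSearch above.
  const { page, limit } = Route.useSearch();

  // Data is fetched client-side with TanStack Query instead of in a
  // server component.
  const { data, isPending, error } = useQuery({
    ...planListQueryOptions({
      query: { page, limit },
    }),
  });

  if (error) {
    return <ErrorPage item="plans" />;
  }

  return (
    <PageLayout
      pageTitle={plansConfig.mainNav.text}
      PageButtons={<PlanPageActions />}
      sticky={true}
    >
      <Section spacing="lg">
        {isPending ? (
          <TableLoading colCount={5} />
        ) : data && data.items.length === 0 ? (
          <PlanEmptyState />
        ) : data ? (
          <PlansTableContainer data={data} />
        ) : null}
      </Section>
    </PageLayout>
  );
}
```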
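The route above passes `searchSchema` (not shown) through `zodValidator`. TanStack Router's `validateSearch` also accepts a plain function that takes the raw search object and returns typed params. As a hypothetical, dependency-free equivalent, assuming the same defaults as the old `getPlans` helper (page 1, limit 1000), it could look like this; `PlansSearch`, `toPositiveInt`, and `validatePlansSearch` are illustrative names, not the dashboard's code.

```typescript
// Hypothetical search params for the plans list. The defaults mirror the
// old getPlans helper (page 1, limit 1000); the real searchSchema may differ.
interface PlansSearch {
  page: number;
  limit: number;
}

// Coerces a raw search value to a positive integer, falling back to a
// default for missing, non-numeric, or non-positive input.
function toPositiveInt(value: unknown, fallback: number): number {
  const n =
    typeof value === "number" ? value : Number.parseInt(String(value ?? ""), 10);
  return Number.isInteger(n) && n > 0 ? n : fallback;
}

// Raw search object in, typed search object out: the shape a plain-function
// validateSearch takes.
function validatePlansSearch(search: Record<string, unknown>): PlansSearch {
  return {
    page: toPositiveInt(search.page, 1),
    limit: toPositiveInt(search.limit, 1000),
  };
}
```

It would be wired in as `validateSearch: validatePlansSearch`, and `Route.useSearch()` would then be typed as `{ page: number; limit: number }`. The zod version buys richer validation and error reporting; the function form just avoids the extra dependency.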

So what did the team have to say after the move? I think the comments speak for themselves.

"The dashboard 🥹 it’s so fast!"

"Looks excellent…. and fast."

"Amazing!! My laptop and patience deeply thank you, Reece!"

Additional benefits

- Faster tests due to migrating to Vitest from Jest

- Fixed lingering styling issues, since Tailwind v4 parsed our styles better

- React 18→19, React PDF + React Email major version bumps

- A persisted query cache for better UX, since it’s easier to manage in a fully client-side app

- Fewer layout shifts and UI hacks

- Type checking time reduced from 30s+ to ~3-5s via tsgo
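For the persisted query cache mentioned above, TanStack Query ships persistence packages (such as `@tanstack/react-query-persist-client` together with a storage persister); this post doesn't cover the exact configuration. The dependency-free sketch below only illustrates the underlying idea, assuming a `localStorage`-like store: snapshot cached results with a timestamp and a version "buster", and restore them only while fresh, similar in spirit to the `maxAge` and `buster` options of TanStack's persister. `createCachePersister` and its options are hypothetical names, not TanStack's API.

```typescript
// Minimal storage interface; window.localStorage satisfies it in a browser.
interface KeyValueStorage {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// What gets written to storage: cached results plus metadata used to
// decide whether the snapshot is still safe to restore.
interface Snapshot<T> {
  timestamp: number;
  buster: string; // bump on deploys that change data shapes
  entries: Record<string, T>;
}

// Hypothetical helper (not TanStack's API): persists query results keyed
// by a serialized query key, restoring them only if fresh and same version.
function createCachePersister<T>(
  storage: KeyValueStorage,
  opts: { key: string; maxAgeMs: number; buster: string },
) {
  return {
    save(entries: Record<string, T>, now = Date.now()): void {
      const snapshot: Snapshot<T> = { timestamp: now, buster: opts.buster, entries };
      storage.setItem(opts.key, JSON.stringify(snapshot));
    },
    restore(now = Date.now()): Record<string, T> | undefined {
      const raw = storage.getItem(opts.key);
      if (raw === null) return undefined;
      try {
        const snapshot = JSON.parse(raw) as Snapshot<T>;
        const expired = now - snapshot.timestamp > opts.maxAgeMs;
        if (expired || snapshot.buster !== opts.buster) {
          storage.removeItem(opts.key); // stale or wrong version: discard
          return undefined;
        }
        return snapshot.entries;
      } catch {
        storage.removeItem(opts.key); // corrupt snapshot: drop it
        return undefined;
      }
    },
  };
}
```

Restoring on startup lets pages render cached data instantly while fresh requests run in the background; the buster keeps a deploy that changes response shapes from rehydrating incompatible data.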

Migrations don’t come without their own pain points, however!

Pain points

- TanStack Start is still new, so I found that part of the migration a little shaky at times when debugging issues, even with Claude’s help. It has stabilised very quickly, but it is still a release candidate, which is worth noting if you're migrating.

- We had a lot of side effects we didn’t expect during the migration. Tailwind v3 → v4 was the main one, causing a lot of UI shifting. This was no fault of TanStack’s, but if you can, I’d recommend avoiding dependency bumps during a migration.

- The bottleneck became us humans being able to verify flows. We have far heavier testing on the backend of our app than on the frontend, so we had to spend more hours than ideal on verification.

I had offloaded some of this to agents in a verification loop similar to the one above, but lacked proper documentation of complex scenarios for the agents to test.

- Moving is only the first step. We’ve already identified different things we’ll need to address, such as first-paint loading and data patterns.

Regardless, if you're having issues with your framework, I highly recommend evaluating your options. There might be something better suited to your team. With the reduced cost of experimentation thanks to AI, it’s worth the investment.

In our next blog post, I’ll explore some of the backend and CI improvements we are making as a team.